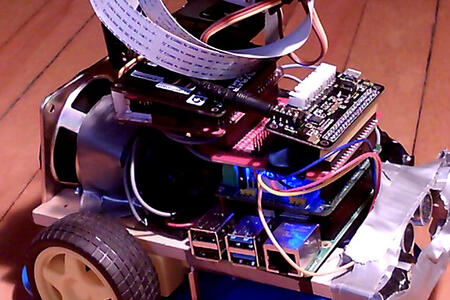

The PI Robot Companion

A companion robot for the pandemic

The goals of the robot were:

Primarily, the robot needed to be autonomous and off the grid.

To this end, all web-based solutions were eliminated. In a sense this was a relief, as the choices were mind-boggling and truly amazing.

Speech Recognition

I tried a variety of speech recognition solutions. I found Rhasspy useful for evaluating the available options; however, after a lot of experimenting I settled on a home-grown integration. Even so, there is still room for improvement.

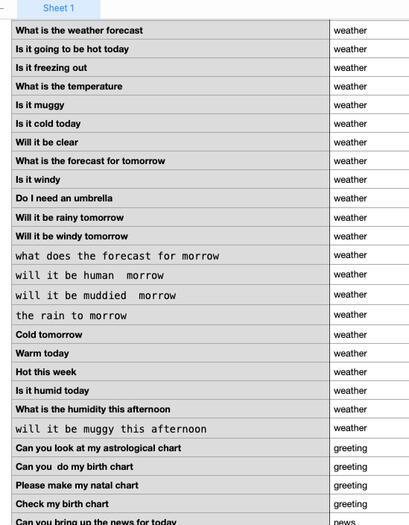

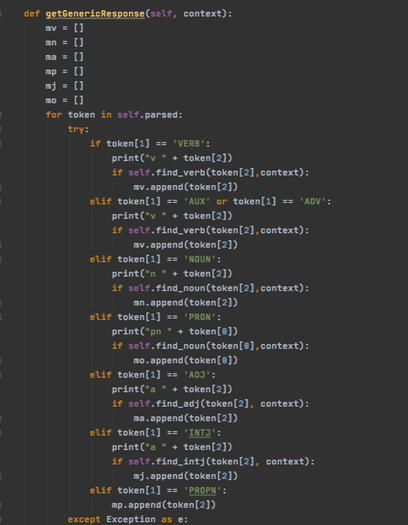

Context and Intents Engine

Here too I evaluated and tried a few options before realizing that spaCy already had a pre-built model, sufficient for the purpose, that I could use to analyze sentences and classify them. For example, I feed the engine a series of sentences about playing music that contain its actions, such as forward, reverse, etc. The engine uses spaCy to analyze these sentences and stores every object it finds, classified, in a MySQL database. When the robot later encounters a sentence containing a series of these actions, it weighs them to work out the most likely context.
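The weighing step can be illustrated with a minimal sketch. It makes two loudly labeled substitutions: a naive regex tokenizer stands in for spaCy, and an in-memory SQLite table stands in for the MySQL database. Each context's example sentences are stored as one row per keyword, and a new sentence is assigned to the context whose stored keywords it matches most often. The function names, stopword list and training sentences are hypothetical.

```python
import re
import sqlite3
from typing import Optional

# Stand-in for spaCy (assumption): a naive lowercase tokenizer with a tiny
# stopword list. With spaCy you would run nlp(sentence) and keep token.lemma_
# for the verbs and nouns instead.
STOPWORDS = {"a", "an", "the", "to", "some", "me", "please"}


def keywords(sentence: str) -> list[str]:
    """Return the lowercase word tokens of a sentence, minus stopwords."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]


def make_db() -> sqlite3.Connection:
    """In-memory SQLite stands in for the MySQL table of classified words."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE keywords (context TEXT, word TEXT)")
    return db


def train(db: sqlite3.Connection, context: str, sentences: list[str]) -> None:
    """Store one row per keyword occurrence in a context's example sentences."""
    rows = [(context, w) for s in sentences for w in keywords(s)]
    db.executemany("INSERT INTO keywords (context, word) VALUES (?, ?)", rows)
    db.commit()


def classify(db: sqlite3.Connection, sentence: str) -> Optional[str]:
    """Weigh each context by how often the sentence's keywords occur in its training rows."""
    words = sorted(set(keywords(sentence)))
    if not words:
        return None
    placeholders = ",".join("?" * len(words))
    row = db.execute(
        "SELECT context, COUNT(*) AS weight FROM keywords "
        f"WHERE word IN ({placeholders}) "
        "GROUP BY context ORDER BY weight DESC, context LIMIT 1",
        words,
    ).fetchone()
    return row[0] if row else None  # None: no known keyword matched


if __name__ == "__main__":
    db = make_db()
    train(db, "music", ["play some music", "skip forward to the next song",
                        "reverse to the previous song", "stop the music"])
    train(db, "movement", ["move forward", "follow me", "turn left", "stop moving"])
    print(classify(db, "skip forward a song"))  # music
    print(classify(db, "move forward please"))  # movement
```

Note that "forward" appears in both contexts, so on its own it is ambiguous; the surrounding words ("skip", "song" versus "move") tip the weighting one way or the other.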

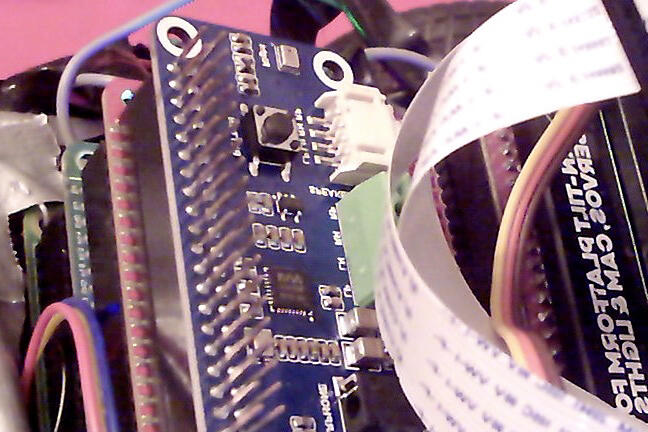

Multi Threading and Multi Processing

I found out early on that my usual ‘path of least resistance’ would not work for this project, so I spent some time preparing the foundations. As a result, it is now relatively easy to add another context, along with the intents within it. One class handles the contexts and passes all matching intents to the corresponding intents class, which decides what to actually do.
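A minimal sketch of that split, assuming a simple registry keyed by context name. The class names (`ContextHandler`, `MusicIntents`, `MovementIntents`) and the action strings are hypothetical; in the robot the intents classes would drive the music player or motors rather than return text.

```python
from typing import Protocol


class Intents(Protocol):
    """Anything with a handle() method can serve as an intents class."""

    def handle(self, intent: str) -> str: ...


class MusicIntents:
    """Hypothetical intents class for the music context."""

    ACTIONS = {
        "forward": "skip to next track",
        "reverse": "go back one track",
        "stop": "stop playback",
    }

    def handle(self, intent: str) -> str:
        return self.ACTIONS.get(intent, f"unknown music intent: {intent}")


class MovementIntents:
    """Hypothetical intents class for the movement context."""

    def handle(self, intent: str) -> str:
        return f"drive: {intent}"


class ContextHandler:
    """Passes the matching intents on to the intents class registered for a context."""

    def __init__(self) -> None:
        self._intents: dict[str, Intents] = {}

    def register(self, context: str, intents: Intents) -> None:
        self._intents[context] = intents

    def dispatch(self, context: str, matched: list[str]) -> list[str]:
        handler = self._intents.get(context)
        if handler is None:
            return []  # unknown context: nothing to do
        return [handler.handle(intent) for intent in matched]
```

With this layout, adding a context means writing one class with a `handle()` method and making one `register()` call; the context class itself does not change.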

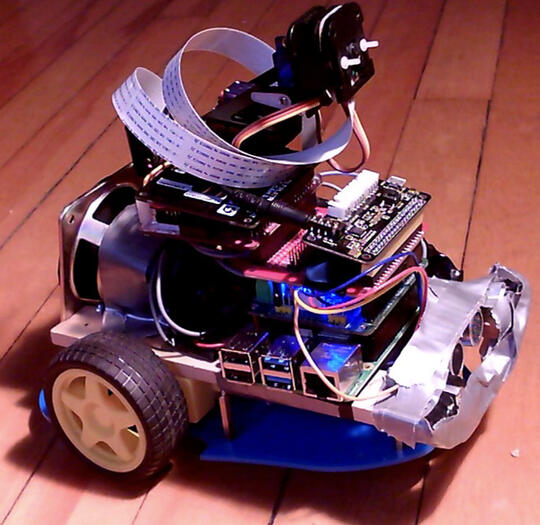

Also, because the foundation is multithreaded and uses queues for communication, I was able to program the robot to play music and follow me around at the same time.
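The queue-based pattern can be sketched with the standard library alone. This assumes one worker thread per activity, each reading commands from its own `queue.Queue` and stopping on a `None` sentinel; the command strings and the shared log are hypothetical placeholders for real audio and motor control.

```python
import queue
import threading


def worker(name: str, inbox: queue.Queue, log: list, lock: threading.Lock) -> None:
    """Process commands from this worker's queue until a None sentinel arrives."""
    while True:
        command = inbox.get()
        if command is None:
            break
        # Placeholder for real work (driving the audio player or the motors).
        with lock:
            log.append(f"{name}: {command}")


def run_demo() -> list:
    music_q: queue.Queue = queue.Queue()
    follow_q: queue.Queue = queue.Queue()
    log: list = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=("music", music_q, log, lock)),
        threading.Thread(target=worker, args=("follow", follow_q, log, lock)),
    ]
    for t in threads:
        t.start()
    # The main thread (e.g. the speech loop) only posts commands, so it never blocks.
    music_q.put("play playlist")
    follow_q.put("track person")
    music_q.put("volume up")
    for q in (music_q, follow_q):
        q.put(None)  # ask each worker to finish
    for t in threads:
        t.join()
    return log


if __name__ == "__main__":
    print(run_demo())
```

Entries from the two threads can interleave in any order, but each queue preserves the order of its own commands.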

On the multiprocessing side, when the robot meets a new person, it takes a series of photos and processes them in the background so as not to slow itself down.
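A minimal sketch of handing photos to a background process, assuming the `fork` start method (available on Linux, including Raspberry Pi OS). To keep it self-contained, each "photo" is a hypothetical list of grayscale pixel values and the "signature" is just its mean brightness; a real version would compute face encodings with OpenCV or TensorFlow instead.

```python
import multiprocessing as mp


def process_photos(person_id: str, photos: list, results) -> None:
    """Runs in a child process: reduce each photo to a placeholder signature."""
    signatures = [sum(p) / len(p) for p in photos]  # mean brightness per photo
    results.put((person_id, signatures))


def start_background_job(person_id: str, photos: list):
    """Start the child process and return it with the queue it reports on."""
    ctx = mp.get_context("fork")  # assumption: Linux, where fork is available
    results = ctx.Queue()
    proc = ctx.Process(target=process_photos, args=(person_id, photos, results))
    proc.start()
    return proc, results


if __name__ == "__main__":
    proc, results = start_background_job("new_person", [[0, 10, 20], [100, 100]])
    # ... the main loop keeps listening, talking and driving here ...
    person_id, signatures = results.get(timeout=10)  # read before join()
    proc.join()
    print(person_id, signatures)  # new_person [10.0, 100.0]
```

The result is read from the queue before `join()` because a child that has put data on a `multiprocessing.Queue` may not exit until that data is consumed.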

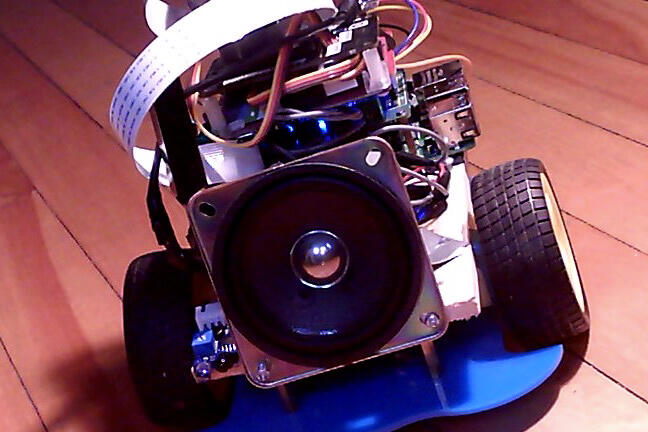

Speech

This was also a challenge, as the basic voices on all the on-device TTS engines sounded terribly robotic. The Mbrola UK English voice (http://espeak.sourceforge.net/mbrola.html) is the most acceptable one to date.
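A small sketch of calling espeak from Python with an MBROLA voice, assuming espeak (or espeak-ng) and the MBROLA `en1` British English voice are installed, which espeak exposes as `mb-en1`. The speaking rate of 140 words per minute is a hypothetical choice; espeak's `-s` option sets the speed and defaults to 175.

```python
import shutil
import subprocess

# Assumption: espeak plus the MBROLA "en1" voice package are installed.
DEFAULT_VOICE = "mb-en1"


def build_espeak_command(text: str, voice: str = DEFAULT_VOICE,
                         words_per_minute: int = 140) -> list[str]:
    """Build an argv list: -v selects the voice, -s the speed in words per minute."""
    return ["espeak", "-v", voice, "-s", str(words_per_minute), text]


def speak(text: str, voice: str = DEFAULT_VOICE) -> bool:
    """Speak text fully offline; returns False if espeak is not installed."""
    if shutil.which("espeak") is None:
        return False
    # Passing a list (no shell) means the text needs no quoting or escaping.
    subprocess.run(build_espeak_command(text, voice), check=True)
    return True
```

Because everything runs on the device, this keeps the speech output consistent with the off-the-grid goal.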

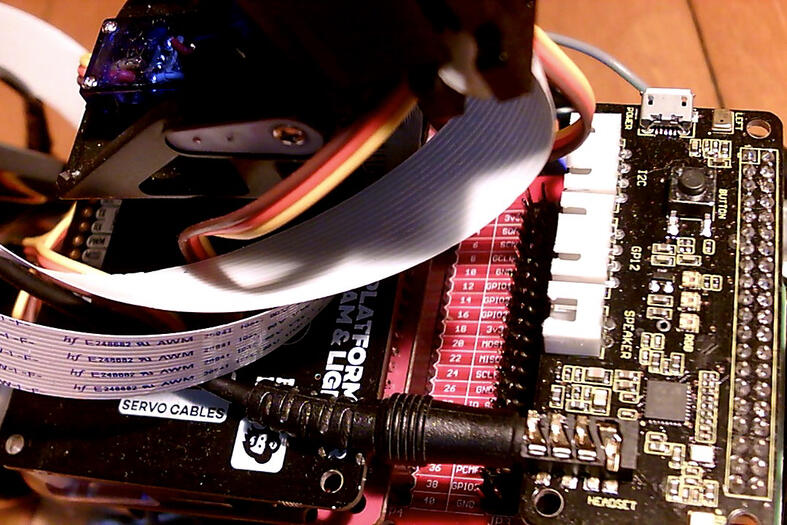

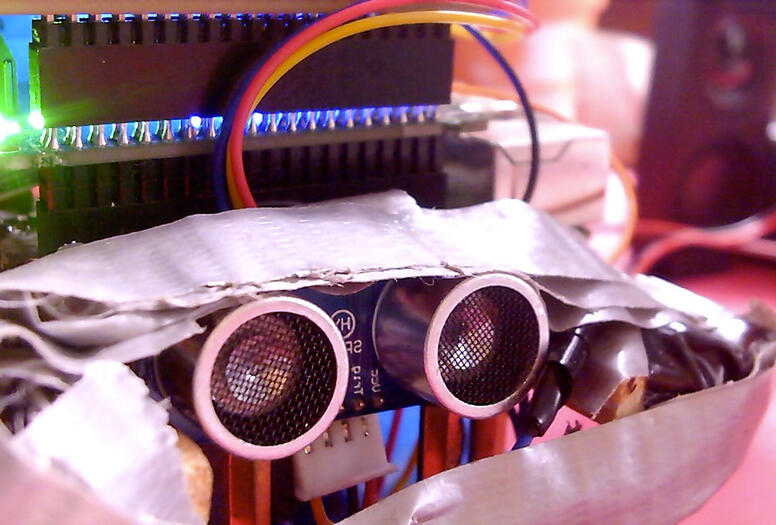

Object and People Detection

This could be a chapter of its own, so in brief: I compiled OpenCV to use all 4 cores for best results. After that, it was a matter of experimenting with and implementing Haar cascades and TensorFlow, and coming up with a variety of tricks in OpenCV. In the group photo function, the robot looks for a person, measures the distance from the top of their head to the top of the frame, and tilts the camera until that distance is about 60 pixels.
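The group photo framing step can be sketched as a simple proportional correction. This assumes the detector (for example a Haar cascade) yields the y coordinate of the top of the head, with y = 0 at the top of the frame as in OpenCV images. The deadband, the degrees-per-pixel gain and the servo limits are hypothetical values that would need tuning for the actual camera and mount.

```python
TARGET_TOP_PX = 60      # from the text: aim for about 60 px above the head
DEADBAND_PX = 10        # hypothetical: ignore small errors to avoid jitter
DEGREES_PER_PX = 0.05   # hypothetical gain; depends on the camera's field of view


def tilt_correction(head_top_y: int, target: int = TARGET_TOP_PX,
                    deadband: int = DEADBAND_PX, gain: float = DEGREES_PER_PX) -> float:
    """Degrees to tilt; positive means tilt up.

    A head too low in the frame (y above target) needs the camera tilted down,
    which moves the scene up in the image, and vice versa.
    """
    error = head_top_y - target
    if abs(error) <= deadband:
        return 0.0
    return -error * gain


def next_tilt_angle(current_deg: float, head_top_y: int,
                    min_deg: float = 0.0, max_deg: float = 180.0) -> float:
    """Apply the correction and clamp to the servo's range.

    Assumption: a larger servo angle tilts the camera up.
    """
    return max(min_deg, min(max_deg, current_deg + tilt_correction(head_top_y)))
```

In use, the robot would repeat detect, correct and re-detect until `tilt_correction` returns 0, since a single step only approximates the required tilt.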